Selwyn, our in-house serverless expert, recently described how edge workers are on the rise. Besides building fast and accessible websites, he writes about the latest developments in web development on his own website. Below is a recap of his latest piece: Exploring the serverless edge.

The serverless trend

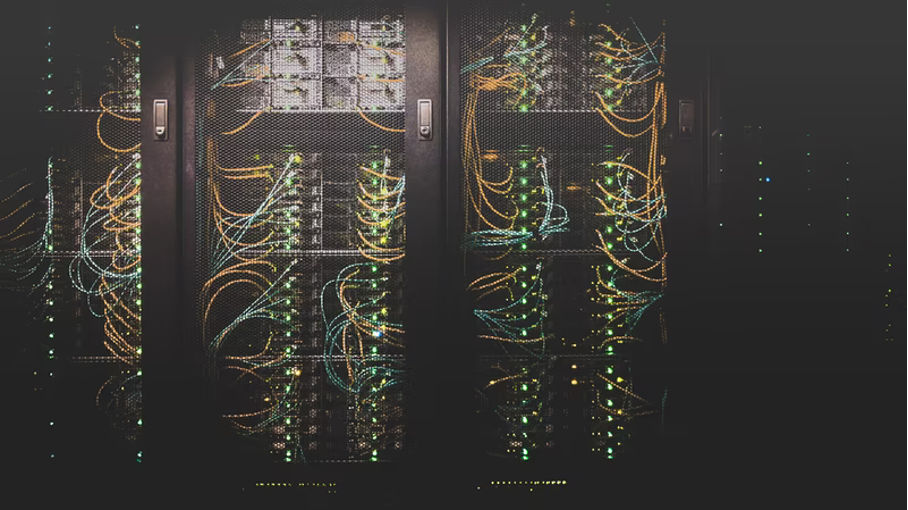

Cloud and serverless: the hottest terms of the last few years. Serverless can be summarised as outsourcing the infrastructure to a cloud provider. Where previously you had to provide your own server to run your software, now someone else does this for you. A newer trend is edge computing, which allows for even faster data processing by doing it close to the end user, for example via mobile services, IoT, sensors or autonomous systems.

How do edge workers help us front-end developers? And what is the current state of edge workers for our favorite programming languages, JavaScript and TypeScript? Selwyn explains this in his latest post, which you can read on his personal blog or below.

Exploring the serverless edge

Serverless functions have been around for years, but their ‘surroundings’ are still in flux, with a focus on developer experience. First, by making functions easy to run and test locally through emulation of the production environment. Second, by integrating with Git, with automatic deploys for production and for pull requests. Third, by combining them with other services, such as static file hosting and analytics. Together these make serverless functions more useful, but edge workers are starting to catch up.

Before diving in, let me clarify the two terms. By serverless functions I mean code paired with a runtime, both of which are started on invocation. Think of services such as Fn, AWS Lambda and Google Cloud Functions. By edge workers I mean code that runs within a specific runtime, for now only the V8 engine. Think of services such as Deno Deploy, Cloudflare Workers and Netlify Edge.
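To make the distinction a bit more concrete, here is a minimal sketch of the two handler shapes in TypeScript. The serverless-function style loosely follows the AWS Lambda convention of an event object in and a plain result object out; the event and result types below are simplified, hypothetical subsets, not the real AWS types. The edge-worker style takes a standard Web API `Request` and returns a `Response`, the shape that services like Cloudflare Workers and Deno Deploy build on. How a handler is registered differs per platform and is left out here.

```typescript
// Hypothetical, simplified subset of an HTTP event: only the field used below.
interface LambdaStyleEvent {
  queryStringParameters?: Record<string, string | undefined> | null;
}

// Hypothetical, simplified result object returned to the platform.
interface LambdaStyleResult {
  statusCode: number;
  headers: Record<string, string>;
  body: string;
}

// Serverless function style: platform-specific event in, plain object out.
async function lambdaStyleHandler(event: LambdaStyleEvent): Promise<LambdaStyleResult> {
  const name = event.queryStringParameters?.name ?? "world";
  return {
    statusCode: 200,
    headers: { "content-type": "text/plain" },
    body: `Hello, ${name}!`,
  };
}

// Edge worker style: standard Web API Request in, Response out.
async function edgeStyleHandler(request: Request): Promise<Response> {
  const name = new URL(request.url).searchParams.get("name") ?? "world";
  return new Response(`Hello, ${name}!`, {
    status: 200,
    headers: { "content-type": "text/plain" },
  });
}
```

Because the edge-worker version only depends on `Request`, `Response` and `URL`, it can be exercised with nothing more than `new Request("https://example.com/?name=Selwyn")`.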

Chilly serverless

Cold starts are a major downside of serverless functions: you have to wait for the runtime to start and, depending on the language, for the code to be parsed. Even a serverless JavaScript function with only a few lines of code carries around 50 to 100 ms of cold start. This number can go up quickly when pulling in dependencies. On top of that you get network latency: the farther the function runs from the user, the longer the latency. Though this is not a serverless downside per se, it often is one in practice, because most serverless function offerings only allow you to deploy to one region.
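One common way to observe cold starts in a JavaScript or TypeScript serverless function is to keep state at module scope. Top-level code, including the imports of any dependencies, runs once when a fresh instance starts, while the handler runs on every invocation. The sketch below assumes that execution model; on AWS Lambda the function would be exported as `handler`, but here it is a plain function so it runs anywhere, and the returned `InvocationInfo` shape is a hypothetical illustration.

```typescript
// Module scope: runs once per cold start, when the runtime loads this file.
// Heavy dependency imports and initialisation placed here add to the cold start.
const instanceStartedAt = Date.now();
let invocationCount = 0;

// Hypothetical diagnostic shape returned by the handler.
interface InvocationInfo {
  coldStart: boolean;
  invocationCount: number;
  instanceAgeMs: number;
}

// Handler scope: runs on every invocation; warm invocations reuse the module state.
async function handler(): Promise<InvocationInfo> {
  invocationCount += 1;
  return {
    // Only the first invocation on a fresh instance pays for the cold start.
    coldStart: invocationCount === 1,
    invocationCount,
    instanceAgeMs: Date.now() - instanceStartedAt,
  };
}
```

Logging `coldStart` next to the request duration is one way to see how much of the total latency is start-up cost rather than your own code.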

Ablaze edge workers

In contrast, edge workers usually run close to the user making the request. And since the runtime is already running, there is no cold or warm start: an invocation takes a few milliseconds or less. The runtime API is comparable to that of Service Workers and Web Workers, embracing the Web APIs we love and hate. Whereas serverless functions have access to the underlying operating system and file system, edge workers communicate through Web APIs such as caches and events. As a result, npm packages that assume they run in Node.js, with access to its built-in modules, will not work. On top of that, edge workers cannot make raw TCP connections, so talking to a database like Postgres is out of the question.
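As an illustration of working with Web APIs instead of the file system, here is a minimal cache-first handler sketch. It is written against a small interface with the `match` and `put` methods of the standard Cache API, so the same logic could be handed a platform cache (for example one from `caches.open`, where the runtime provides it) or an in-memory stand-in. The `fetchOrigin` parameter and the `MemoryCache` class are hypothetical helpers for illustration, not part of any platform.

```typescript
// The subset of the standard Cache API this sketch relies on.
interface RequestCache {
  match(request: Request): Promise<Response | undefined>;
  put(request: Request, response: Response): Promise<void>;
}

// Cache-first: serve from the cache when possible, otherwise fetch and store a copy.
async function cacheFirst(
  request: Request,
  cache: RequestCache,
  fetchOrigin: (request: Request) => Promise<Response>,
): Promise<Response> {
  // Only cache GET requests; everything else goes straight to the origin.
  if (request.method !== "GET") {
    return fetchOrigin(request);
  }
  const cached = await cache.match(request);
  if (cached) {
    return cached;
  }
  const response = await fetchOrigin(request);
  if (response.ok) {
    // A Response body can be read only once, so store a clone and return the original.
    await cache.put(request, response.clone());
  }
  return response;
}

// Hypothetical in-memory stand-in for a platform cache, keyed by URL.
class MemoryCache implements RequestCache {
  private entries = new Map<string, Response>();

  async match(request: Request): Promise<Response | undefined> {
    // Hand out a clone so the stored copy stays readable for later hits.
    return this.entries.get(request.url)?.clone();
  }

  async put(request: Request, response: Response): Promise<void> {
    this.entries.set(request.url, response);
  }
}
```

The cloning on both sides is the part that is easy to get wrong: a `Response` body is a stream, so without it the first reader would leave nothing for the next one.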

The bleeding edge

But last week Deno Deploy, an edge worker service, released Beta 3, which adds support for raw TCP connections! I expect other edge worker services to follow suit, but until then there is the Prisma Data Proxy, currently in early access. An edge worker connects to the Prisma Data Proxy over HTTP, and the proxy, acting as a connection pool, in turn talks to the database.
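The general idea behind such an HTTP data proxy can be sketched generically: instead of opening a TCP connection to Postgres, the edge worker sends its query to an HTTP endpoint, and the service behind that endpoint holds the pooled database connections. This is not Prisma's actual API; the endpoint, the JSON request and response shapes, the bearer-token header and the `queryViaProxy` helper below are all hypothetical.

```typescript
// Hypothetical request body understood by the proxy.
interface ProxyQuery {
  sql: string;
  params: unknown[];
}

// Hypothetical response body returned by the proxy.
interface ProxyResult<Row> {
  rows: Row[];
}

type FetchLike = (input: string, init?: RequestInit) => Promise<Response>;

// Send a parameterised query over HTTPS; only the proxy keeps TCP connections to the database.
async function queryViaProxy<Row>(
  endpoint: string,
  apiKey: string,
  query: ProxyQuery,
  fetchImpl: FetchLike = fetch,
): Promise<Row[]> {
  const response = await fetchImpl(endpoint, {
    method: "POST",
    headers: {
      "content-type": "application/json",
      authorization: `Bearer ${apiKey}`,
    },
    body: JSON.stringify(query),
  });
  if (!response.ok) {
    throw new Error(`Proxy responded with HTTP ${response.status}`);
  }
  const result = (await response.json()) as ProxyResult<Row>;
  return result.rows;
}
```

Sending the SQL and its parameters separately keeps values out of the query string, and making `fetchImpl` injectable lets you test the worker without a real proxy.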

With these changes, edge workers seem to be catching up with, and even going beyond, what serverless functions offer. Exciting stuff!

More blogs?

Want to read more about serverless? Check out our blog post on the Svelte framework. Or see how cloud functions are a solution for overloaded servers. More blog posts by Selwyn? In earlier posts he explains how to set up a secure Linux server and how to install npm on GitHub Actions.