AI has been able to generate stunningly beautiful images for a while now, but recently, it has received a lot of media attention. It’s a controversial topic that has been popping up all over the place, especially in art communities.

At De Voorhoede, we use quite a few illustrations to communicate with the outside world. So far, all these illustrations have been drawn by hand, but can we use AI to generate them in our style? The short answer is yes, but let’s take a closer look at the technology and some examples.

The technology: Stable Diffusion

For this research we used Stable Diffusion, more specifically the Stable Diffusion Web UI. The web UI offers a collection of powerful tools, including the possibility to train models. No programming knowledge is required. We didn't write a single line of code.

Training a model from scratch requires a lot of computing power and time. Thankfully, we are able to train on top of existing models. This allows us to achieve great results with limited training data in a relatively short timespan.

Embedding vs hypernetwork

There are two types of layers that we can train: embedding and hypernetwork. Embedding layers are responsible for encoding the inputs. Essentially, it guides the model to produce images that match the user's input. You can use embedding layers to teach a model how to render new things like objects, animals, or a specific face.

Hypernetworks on the other hand allows Stable Diffusion to create images based on previous knowledge. You don’t teach the model anything new, you teach it how to “remember”. This means that when a user provides a new input, the model will try to remember what it did before in order to generate something similar to it. This is great if you want to consistently generate images in a single style.

The process: trial and error

Our knowledge of AI image generation was very limited when we started on this project. Because of this, the first training method we used was embedding, simply because it was easier and faster to train. Before we could start the training process, we needed to prepare our training data. In our case, we used 25 images, each image had an associated caption explaining its content. Here are a few examples:

Training the embedding layer

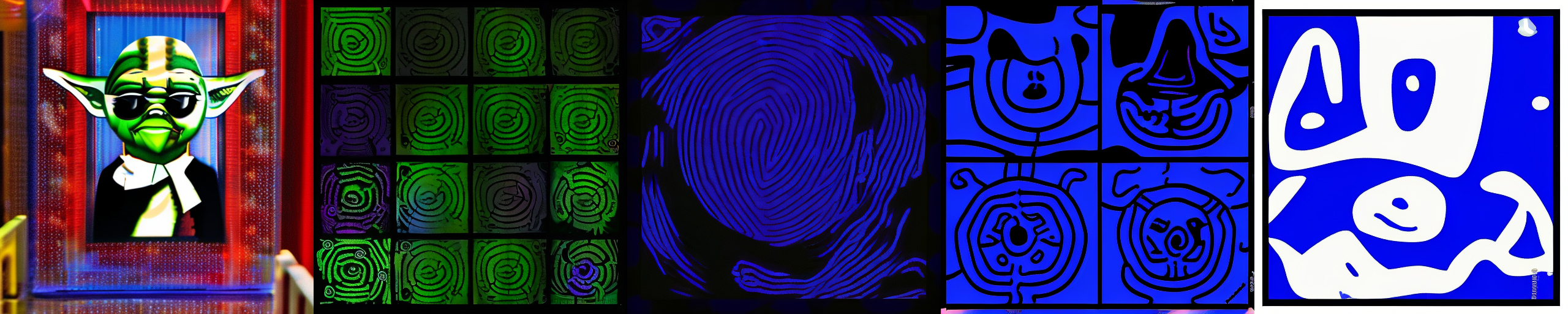

Once we had the training data ready, it was time to train the model. We trained the first version for about 24 hours, this gave us 5000 training steps. Every 25 steps, we generated an image to document the process. The image below shows the first, the last and a few in-between images. Can you guess the input? It was “half (yoda) person, line art and monochrome”.

Let’s be honest, the results are horrible.

In fact, the further we got, the worse the results became. The reason for this was a combination of different things:

- bad captions

- bad input

- wrong configuration

- and the training method itself

Embedding layers are great for teaching models how to render something new, but that wasn't our goal, we wanted to consistently generate images in our style, we never meant to teach it something new.

Training the hypernetwork layer

After learning from our previous mistakes, we gave hypernetworks a try. Training a hypernetwork is a lot slower than training an embedding layer. For the second version we trained 10000 steps and that took about 72 hours. The image below shows the training process.

As you can see, this gave us much better results, and more importantly it gave us consistent results. This time, the input used was “(line art | vector) a portrait of a woman”.

Voor het afspelen van deze video is het delen van informatie met YouTube vereist.

https://www.youtube.com/watch?v=tYP_U4VqcR0The video above shows the rendering process in a visual way. Below are a few examples of generated images. The image on the left was drawn by hand, the image in the centre was generated by our trained model, and the image on the right was generated by the default, untrained model.

The results: lessons learned

As you can see, the model is able to generate images in our style:

- they all have curvy lines

- the lines have multiple widths

- they are all blue on white

However, not every generated image looks good, while the style is correct, they are not something that our illustrators should draw.

There are a few things that we could have done to get better results. For example, the original model that we used is very generic, it’s great at rendering digital art and photorealistic images but not that great at rendering vector based images. Using a model that focuses on vectors or cartoons should get us better results, but that is something that we learned afterward.

How do our illustrators feel about AI?

All the illustrations of De Voorhoede are custom-made by our two colleagues, Anne and Joan. We are very curious about their opinion, since their viewpoint is way more valuable than giving a personal or biased opinion on the technology and the achieved results. We asked them; how do you feel about AI generated illustrations?

Anne: “It’s impressive what AI can do with proper training, especially when creating high fidelity photorealistic compositions and remixes of existing material. But a large part of creating pretty much anything is what happens during the process of creation. There’s a free flow of ideas, inspiration, new thoughts, trying out different techniques, mixing up old and new. This is far from a linear process. AI-generated art takes a prompt and all kinds of settings, but the output will always be a direct result of that and never something unexpected.

Of course, the illustrations on our website are hardly a work of art, so the idea of letting them be generated is a pretty good one. However, the AI can’t really figure out where to add little details, where to keep it sketchy, how to make it playful, or how to fit in a new illustration with the existing library in regard to style and concept. The results are static and humorless. Robots 0, humans 1!”

- Anne, Lead Front-end developer and illustrator at De Voorhoede

AI can’t really figure out where to add little details, where to keep it sketchy, how to make it playful.

Joan: “Can I stop creating illustrations for De Voorhoede and rely solely on AI? In short, the answer is no.

While AI technology has advanced in many ways, it is not yet advanced enough to completely replace the creativity and skill required for creating illustrations. AI can be a useful tool for inspiration when experiencing artistic block, but it is not capable of creating specific illustrations with the same level of detail and nuance.

Using AI for more abstract drawings, such as a plant or a cat, can save time and effort, but it is not a substitute for the unique perspective and style required to create a specific illustration.”

Resources

Want to give it a shot yourself or read more about the techniques? We used these resources: